As I continue to dive deeper into the research related to professional development (PD) and adult learning initiatives within higher education, one aspect of PD I’ve yet to explore is the evaluation of PD. In other words, how do we determine if a PD enterprise was successful? Is the learning having an ongoing, meaningful impact in the workplace? Did it make a difference?

In order to answer these questions, we must step back to think about two things: 1) what do we mean by ‘success’ in relation to PD (in other words, what particular indicators should we pay attention to), and 2) how/when should we gather data related to those indicators?

As an entry point to this investigation, Guskey (2002) directs our attention to key indicators of effective PD and adult learning, as well as possible ways of gathering data related to those indicators. These indicators (or “levels of evaluation”) apply to higher education instructors just as much as they do to K-12 teachers. The Five Possible Indicators of PD Effectiveness, as I am referring to them, are summarized below:

| Indicator | What’s measured? | How will data be gathered? |

| Participants’ Reactions | Did participants enjoy the learning experience? Did they trust the expertise of those teaching/leading? Is the learning target perceived as relevant/useful? Was the delivery format appropriate/comfortable? | Exit Questionnaire, informal narrative feedback from attendees |

| Participants’ Learning | Did participants acquire new knowledge/skills? Did the learning experience meet objectives? Was the learning relevant to current needs? | Participant reflections, portfolios constructed during the learning experience, demonstrations, simulations, etc. |

| Organization Support/Change | Was the support for the learning public and overt? Were the necessary resources for implementation provided? Were successes recognized/shared? Was the larger organization impacted? | Structured interviews with participants, follow-up meetings or check-ins related to the PD, administrative records, increased access to needed resources for implementation |

| Participants’ Use of New Knowledge/Skills | Did participants effectively apply the new knowledge/skills? Is the impact ongoing? Were participants’ beliefs changed as a result of the PD? | Surveys, instructor reflections, classroom observations, professional portfolios |

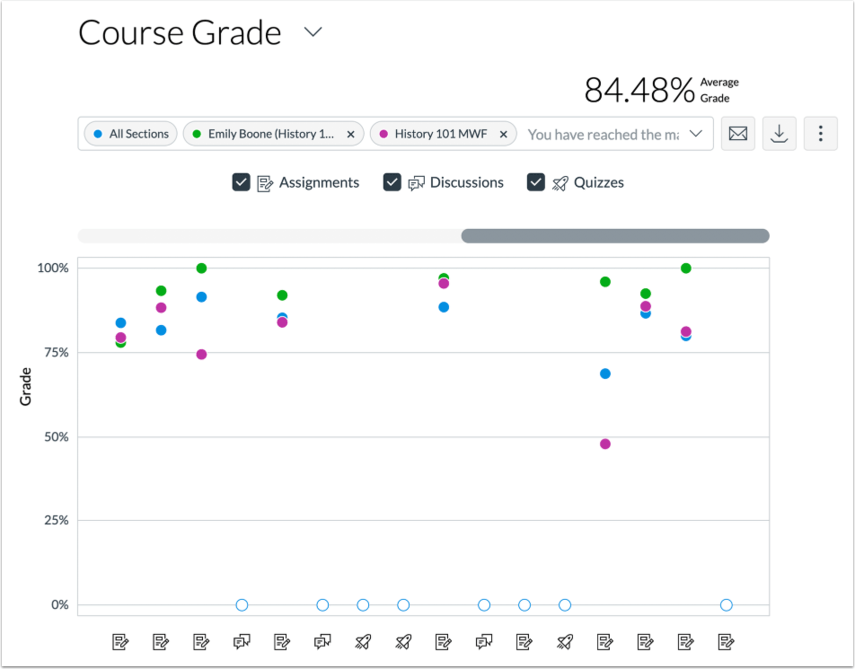

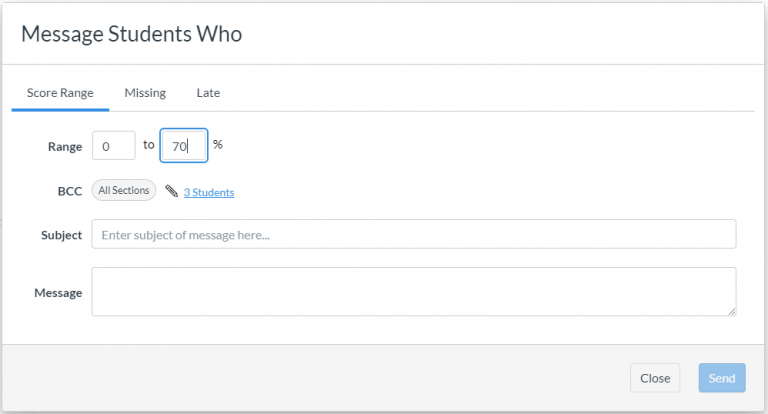

| Student Learning Outcomes | What was the impact on student academic performance? Are students more confident and/or independent as learners? Did it influence students’ well-being? | Either qualitative (e.g. student interviews, teacher observations) or quantitative (e.g. assignments/assessments) improvements in student output/performance, behaviors, or attitudes |

It is important to note that these indicators often work on different timelines and will be used at different stages of PD evaluation, but they should also be considered in concert with one another as much as possible (Guskey, 2002). For example, data about participants’ reactions can be collected immediately and is an easy first step towards evaluating the effectiveness of PD, but initial reactions captured in an exit survey certainly won’t paint the whole picture. Student learning outcomes are another indicator to consider, but they cannot be measured right away and require time and follow-up well beyond the initial PD activity or workshop. Furthermore, it can be harmful to place too much evaluative emphasis on any single indicator. If student learning outcomes are the primary measure taken into consideration, this puts unfair pressure on the “performance” aspect of learning (e.g. assessments) and ignores other vital evidence, such as changed attitudes or beliefs on the part of the teacher, or the role of context and applicability in the learning:

“…local action research projects, led by practitioners in collaboration with community members and framed around issues of authentic social concern, are emerging as a useful framework for supporting authentic professional learning.”

(Webster-Wright, 2009, p.727)

PD evaluation often consists entirely of exit surveys or participant reflections collected shortly after a workshop or learning activity, with very little follow-up afterward (e.g. classroom observations, release time for collaboration using learned skills) (Lawless & Pellegrino, 2007). This does nothing to ensure that professional learning is truly being integrated in a way that has meaningful, ongoing impact. In their study of faculty professional development programs in higher education, Ebert-May et al. (2011) found that 89% of faculty who participated in a student-centered learning workshop self-reported making changes to their lecture-based teaching practices. Considered by itself, this feedback might lead some to conclude that the PD initiative was effective. However, when these same instructors were observed in action in the months (and years!) following their professional learning workshop, 75% of faculty attendees had made no perceptible changes to their teacher-centered, lecture-based teaching approach, demonstrating “…a clear disconnect between faculty’s perceptions of their teaching and their actual practices” (Ebert-May et al., 2011). Participants’ initial reactions and self-evaluations can’t be considered in isolation. Organizational support, evidence of changed practice, and impact on student learning (both from an academic and ‘well-being’ perspective) must be considered as well. Consequently, we might reasonably conclude that one-off PD workshops with little to no follow-up beyond initial training will rarely be “effective.”

It is also worth mentioning that the need for PD related to technology integration has been rising over the last two decades, and it has accelerated further during the pandemic. In recent years the federal government has invested in a number of initiatives meant to ensure that schools, especially K-12 institutions, keep pace with technology developments (Lawless & Pellegrino, 2007). These initiatives include training the next generation of teachers to use technology in their classrooms and retraining the current teacher workforce in the use of tech-based instructional tactics (Lawless & Pellegrino, 2007). With technology integration so often at the forefront of PD initiatives, this raises the question: should tech-centered PD be evaluated differently than other PD enterprises?

I would argue no. In a comprehensive and systematic literature review of how technology use in education has been evaluated in the 21st century, Lai & Bower (2019) found that the evaluation of learning technology use tends to focus on eight themes or criteria:

- Learning Outcomes: academic performance, cognitive load, skill development

- Affective Elements: motivation, enjoyment, attitudes, beliefs, self-efficacy

- Behaviors: participation, interaction, collaboration, self-reflection

- Design: course quality, course structure, course content

- Technology Elements: accessibility, usefulness, ease of adoption

- Pedagogy: teaching quality/credibility, feedback

- Presence: social presence, community

- Institutional Environment: policy, organizational support, resource provision, learning environment

It seems to me that these eight foci could all easily find their way into the adapted table of indicators I’ve provided above. Perhaps the only nuance in this list is an “extra” focus on the functionality, accessibility, and usefulness of technology tools as they apply to both the learning process and learning objectives. Otherwise, Lai & Bower’s (2019) evaluative themes align quite well with the five indicators of PD effectiveness adapted from Guskey (2002), such that the five indicators might be used to frame PD evaluation in all kinds of settings, including the tech-heavy professional learning occurring in the wake of COVID-19.

References:

Ebert-May, D., Derting, T. L., Hodder, J., Momsen, J. L., Long, T. M., & Jardeleza, S. E. (2011). What we say is not what we do: Effective evaluation of faculty professional development programs. BioScience, 61(7), 550-558. https://academic.oup.com/bioscience/article/61/7/550/266257?login=true

Guskey, T. R. (2002). Does it make a difference? Evaluating professional development. Educational Leadership, 59(6), 45-51. https://uknowledge.uky.edu/cgi/viewcontent.cgi?article=1005&context=edp_facpub

Lai, J. W. M., & Bower, M. (2019). How is the use of technology in education evaluated? A systematic review. Computers & Education, 133, 27-42. https://doi.org/10.1016/j.compedu.2019.01.010

Lawless, K. A., & Pellegrino, J. W. (2007). Professional development in integrating technology into teaching and learning: Knowns, unknowns, and ways to pursue better questions and answers. Review of Educational Research, 77(4), 575-614. https://journals.sagepub.com/doi/full/10.3102/0034654307309921

Webster-Wright, A. (2009). Reframing professional development through understanding authentic professional learning. Review of Educational Research, 79(2), 702-739. https://journals.sagepub.com/doi/full/10.3102/0034654308330970